Introducing Twin Stubs for integration test suite performance optimization

OBJECTIVE: Reduce the time needed to get feedback from integration tests.

Today I decided to share my methodology for software testing, a journey that began, I believe, on the day I wrote my first unit test about eight years ago at Dolby Laboratories. At that time, I remember, we had a heavy end-to-end QA test suite that took approximately 12 hours to complete. The only way to get feedback from QA was to go home, sleep, and check the results the next morning.

It isn’t possible to fully introduce the technique in a single post and keep it engaging, so this is only a brief introduction. At the same time, it can’t be too short, because it needs to provide enough context.

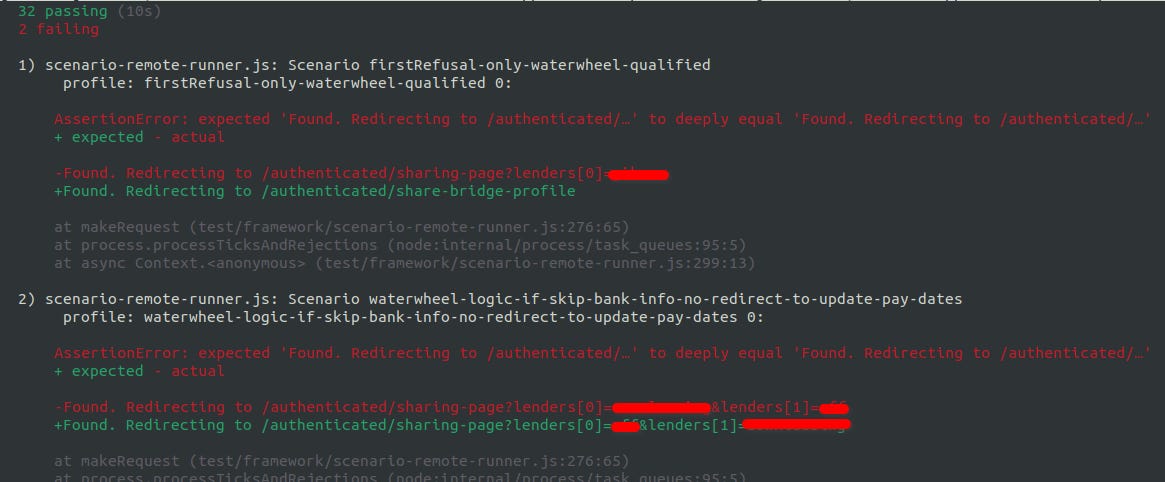

I think it is most interesting to start with the results I achieved, since they are the reason I believe this technique could benefit the community. The attached image shows actual runs of my company's integration test suite. They include just 64 tests out of a few hundred; this was an experiment, and the rest of the suite had not yet been migrated to the new technique.

In case the image is hard to read:

| Picture | Passing | Failing | Duration |
|---------|---------|---------|----------|
| First   | 32      | 2       | 10 s     |
| Second  | 32      | 2       | 7 min    |

🔥 Using my technique, I significantly reduced the runtime of the integration test suite from 7 minutes to just 10 seconds!

🔥 When I intentionally removed one line from the production code, I got the exact same failures in both runs.

The technique consists of two concepts, which I will temporarily name Unit Test Chaining and Twin Stubs.

In short:

💡 Unit Test Chaining by Data is designed to test contracts between units. When we write isolated tests for specific units, we don't truly test the interfaces between them. That’s why we use integration tests. However, integration tests are slow.

In this concept, a static file stores a shared test dataset used across different units. For example, if Unit B depends on Unit A (that is, Unit X calls Unit A, takes its output, and passes it to Unit B), then the expected output of Unit A (used in Unit A's assertions) becomes the input data for Unit B in the dataset.
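
A minimal sketch of chaining by data, in Python purely for illustration. The unit names, field names, and data are hypothetical, and the JSON is inlined only to keep the example self-contained (in a real suite it would live in a static file). Unit A is assumed to be the mapping layer of a third-party API adapter.

```python
import json

# Hypothetical shared dataset; normally loaded from a static JSON file.
DATASET = json.loads("""
{
  "unit_a": {
    "input": {"id": 42, "contact": {"email": "ada@example.com"}, "subscription": "PRO"},
    "expected_output": {"user_id": 42, "email": "ada@example.com", "plan": "pro"}
  },
  "unit_b": {
    "expected_output": "Welcome back, ada@example.com (pro plan)"
  }
}
""")


def unit_a(raw):
    """Hypothetical adapter mapping: third-party payload -> internal profile."""
    return {
        "user_id": raw["id"],
        "email": raw["contact"]["email"],
        "plan": raw["subscription"].lower(),
    }


def unit_b(profile):
    """Hypothetical consumer of Unit A's output."""
    return f"Welcome back, {profile['email']} ({profile['plan']} plan)"


def test_unit_a():
    data = DATASET["unit_a"]
    assert unit_a(data["input"]) == data["expected_output"]


def test_unit_b():
    # The chain: Unit B's input is Unit A's *expected output* from the dataset,
    # not a hand-written fixture, so both tests share one view of the contract.
    profile = DATASET["unit_a"]["expected_output"]
    assert unit_b(profile) == DATASET["unit_b"]["expected_output"]


if __name__ == "__main__":
    test_unit_a()
    test_unit_b()
    print("chained unit tests passed")
```

Each test stays isolated and fast, yet Unit B is never tested against data that Unit A would not actually produce.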

This means that if the contract for Unit A changes (e.g., it’s an adapter for a third-party API, and the API response no longer contains a specific parameter), we need to update the expected output in the dataset. When we run the entire unit test suite, the test for Unit B will fail, indicating it relied on that parameter. After fixing Unit B, its expected output in the dataset might also change.

If Unit C and Unit D happen to depend on Unit B's output, their tests will also fail. This chain of failures will propagate through the system up to the top-layer unit tests (unless some unit in the chain no longer requires the modified property). This approach highlights what has changed and forces us to address the impact across the entire system.
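
The step-by-step propagation can be simulated with a tiny runner. Everything here is a hypothetical scenario: a three-unit chain A -> B -> C, a third-party API that stops sending a `plan` field, and a fix to Unit B that treats an unknown plan as non-paying. It shows how each fix moves the failure one link further down the chain.

```python
import copy

# Hypothetical dataset for the chain A -> B -> C: each unit's expected output
# is also the next unit's input.
BASE_DATASET = {
    "a_input": {"id": 7, "email": "ada@example.com", "plan": "pro"},
    "a_output": {"email": "ada@example.com", "plan": "pro"},
    "b_output": {"greeting": "Hi ada@example.com", "is_paying": True},
    "c_output": "show-invoice-button",
}


def unit_a_v1(raw):
    return {"email": raw["email"], "plan": raw["plan"]}


def unit_b_v1(profile):
    return {"greeting": "Hi " + profile["email"], "is_paying": profile["plan"] != "free"}


def unit_c(summary):
    return "show-invoice-button" if summary["is_paying"] else "show-upgrade-banner"


def run_suite(units, dataset):
    """Run the chained unit tests and return the names of the failing ones."""
    checks = {
        "A": lambda: units["A"](dataset["a_input"]) == dataset["a_output"],
        "B": lambda: units["B"](dataset["a_output"]) == dataset["b_output"],
        "C": lambda: units["C"](dataset["b_output"]) == dataset["c_output"],
    }
    failing = []
    for name, check in checks.items():
        try:
            passed = check()
        except KeyError:  # a field the unit relies on vanished from its input
            passed = False
        if not passed:
            failing.append(name)
    return failing


# Step 1: the third-party API stops sending "plan"; Unit A and its entry change.
def unit_a_v2(raw):
    return {"email": raw["email"]}


STEP1 = copy.deepcopy(BASE_DATASET)
del STEP1["a_input"]["plan"]
del STEP1["a_output"]["plan"]


# Step 2: Unit B is fixed (unknown plan treated as non-paying); its entry changes.
def unit_b_v2(profile):
    return {"greeting": "Hi " + profile["email"], "is_paying": False}


STEP2 = copy.deepcopy(STEP1)
STEP2["b_output"]["is_paying"] = False

if __name__ == "__main__":
    print(run_suite({"A": unit_a_v1, "B": unit_b_v1, "C": unit_c}, BASE_DATASET))  # []
    print(run_suite({"A": unit_a_v2, "B": unit_b_v1, "C": unit_c}, STEP1))  # ['B']
    print(run_suite({"A": unit_a_v2, "B": unit_b_v2, "C": unit_c}, STEP2))  # ['C']
```

With the original units the suite is green; after Unit A's contract changes only B fails; once B is fixed and its dataset entry updated, the failure moves on to C, which must now decide how to handle the changed input.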

💡 A Twin Stub is essentially a stubbed version of the original production code (a class, a function, or similar) that passes all the same tests as the production implementation. A Twin can be created for any code in the system, but to achieve the integration-test speed-up described earlier, the bare minimum is to create Twin Stubs for all adapters of remote dependencies, such as databases and APIs. (For a database, however, we likely won’t reimplement its logic in a stub; instead, we might run the same database locally to reproduce its behavior.)
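
A sketch of a Twin Stub for a remote-dependency adapter, assuming a hypothetical exchange-rate API. The URL and response shape used by `HttpExchangeRates` are placeholders, not a real service. The point is that both classes expose the same `rate` method with the same behavior, including raising `LookupError` for an unknown pair, so callers cannot tell them apart.

```python
import json
import urllib.request
from typing import Protocol


class ExchangeRates(Protocol):
    """Port the rest of the system depends on (hypothetical)."""

    def rate(self, base: str, quote: str) -> float: ...


class HttpExchangeRates:
    """Production adapter: calls a remote API (URL and payload are placeholders)."""

    def __init__(self, base_url: str = "https://rates.example.com") -> None:
        self._base_url = base_url

    def rate(self, base: str, quote: str) -> float:
        if base == quote:
            return 1.0
        url = f"{self._base_url}/latest?base={base}&symbols={quote}"
        with urllib.request.urlopen(url, timeout=5) as response:
            payload = json.load(response)
        # Assumed payload shape {"rates": {"USD": 1.1}}; a missing quote raises
        # KeyError, which is a subclass of LookupError.
        return float(payload["rates"][quote])


class TwinExchangeRates:
    """Twin Stub: same contract, answers from an in-memory table, no network."""

    def __init__(self, rates: dict[tuple[str, str], float]) -> None:
        self._rates = dict(rates)

    def rate(self, base: str, quote: str) -> float:
        if base == quote:
            return 1.0
        try:
            return self._rates[(base, quote)]
        except KeyError:
            raise LookupError(f"no rate for {base}/{quote}") from None
```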

The Twin and the production code are Chained by Routine, meaning that every test added for the production code must also be satisfied by its Twin Stub. The reliability of the Twin Stub depends on how thorough the tests are.
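
Chaining by routine can be sketched as one contract-checking function that every implementation must pass. The names below are hypothetical. The same routine accepts a well-behaved twin and rejects a drifted twin that silently returns `0.0` for unknown pairs; in a real suite the routine would also run, more slowly, against the production adapter.

```python
from typing import Protocol


class ExchangeRates(Protocol):
    def rate(self, base: str, quote: str) -> float: ...


def _expect(condition: bool, message: str) -> None:
    # Explicit check instead of `assert`, so it still runs under `python -O`.
    if not condition:
        raise AssertionError(message)


def check_exchange_rates_contract(provider: ExchangeRates) -> None:
    """Shared routine: the production adapter AND its Twin Stub must pass it.

    Assumes every provider under test knows the EUR/USD pair.
    """
    _expect(provider.rate("EUR", "EUR") == 1.0, "identity rate must be 1.0")
    _expect(provider.rate("EUR", "USD") > 0, "known rates must be positive")
    try:
        provider.rate("EUR", "ZZZ")  # hypothetical unknown currency code
    except LookupError:
        pass
    else:
        raise AssertionError("unknown pairs must raise LookupError")


class TwinExchangeRates:
    """Twin Stub answering from an in-memory table."""

    def __init__(self, rates: dict[tuple[str, str], float]) -> None:
        self._rates = dict(rates)

    def rate(self, base: str, quote: str) -> float:
        if base == quote:
            return 1.0
        if (base, quote) not in self._rates:
            raise LookupError(f"no rate for {base}/{quote}")
        return self._rates[(base, quote)]


class DriftedTwin(TwinExchangeRates):
    """A twin that drifted from production: it hides unknown pairs as 0.0."""

    def rate(self, base: str, quote: str) -> float:
        try:
            return super().rate(base, quote)
        except LookupError:
            return 0.0


def passes_contract(provider: ExchangeRates) -> bool:
    try:
        check_exchange_rates_contract(provider)
    except AssertionError:
        return False
    return True
```

Adding a case to `check_exchange_rates_contract` tightens both implementations at once, which is what keeps the twin trustworthy.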

With all the Twin Stubs for our remote dependencies in place, the next step is to inject them into the system during integration tests instead of using the actual remote dependencies. This approach not only makes the test suite faster but also more stable and deterministic, as it eliminates issues such as unpredictable behavior from third-party APIs, network timeouts, and similar problems.
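
Injecting the twins is ordinary dependency injection at the composition root. In this sketch, `CheckoutService`, `build_app`, and the exchange-rate port are hypothetical: production code would pass the HTTP adapter, while the integration test passes the Twin Stub, so the whole call path runs without a network call.

```python
from dataclasses import dataclass
from typing import Protocol


class ExchangeRates(Protocol):
    def rate(self, base: str, quote: str) -> float: ...


class TwinExchangeRates:
    """Twin Stub for the remote exchange-rate adapter (in-memory table)."""

    def __init__(self, rates: dict[tuple[str, str], float]) -> None:
        self._rates = dict(rates)

    def rate(self, base: str, quote: str) -> float:
        if base == quote:
            return 1.0
        if (base, quote) not in self._rates:
            raise LookupError(f"no rate for {base}/{quote}")
        return self._rates[(base, quote)]


@dataclass
class CheckoutService:
    """Hypothetical top-level unit that depends only on the ExchangeRates port."""

    rates: ExchangeRates

    def total_in(self, amount: float, currency: str, target: str) -> float:
        return round(amount * self.rates.rate(currency, target), 2)


def build_app(rates: ExchangeRates) -> CheckoutService:
    """Composition root: production passes the HTTP adapter, tests pass the twin."""
    return CheckoutService(rates=rates)


def test_checkout_converts_currency() -> None:
    # Integration test (pytest style): real wiring, Twin Stub at the edge.
    app = build_app(TwinExchangeRates({("EUR", "USD"): 1.1}))
    assert app.total_in(100.0, "EUR", "USD") == 110.0
```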

By doing this, you can achieve the results shared earlier: the exact same feedback from the integration test suite in about 10 seconds instead of 7 minutes.

Employing this mechanism can be highly beneficial: faster feedback from the integration suite can significantly shorten Lead Time for Changes, one of the DORA metrics used in DevOps practice.

I hope this (relatively) brief introduction was clear enough. I’d love to hear your thoughts—do you find this useful? Would you like me to dive deeper into these concepts?