Yes, Writing Tests Slows You Down—Until It Doesn’t

How Automated Tests Can Improve Developer Productivity

Although the wide range of benefits of automated tests is well known, I'm surprised how often, over my 10 years in software engineering, I've had to argue against the claim that tests slow development down. The usual reasoning is that writing and maintaining them has a cost.

For reasons I still don't fully understand, many people don't see how tests can actually improve a developer's performance.

Since the other benefits of tests are already widely known, I'd like to focus on this one aspect, performance, and look at it from both the manager's and the developer's perspective.

Maybe you're a developer trying to convince your manager that tests won't hurt productivity, and your manager asks, "Why test it if you believe it will work?"

Or maybe you're a manager who has noticed quality issues and is trying to convince your developers to start writing tests, and their response is, "I don't have time for that. It's the testers' responsibility."

Here are two stories, starting with Mark's:

Story 1: The Embedded Developer’s Tedious Routine

Mark worked at an IoT startup, building firmware for smart thermostats. He needed to update a simple temperature threshold condition in the device’s power-saving mode.

He modified one line of code. Just one. But there was no way to test it on his laptop. He had to:

Compile the firmware. (5 minutes)

Flash the firmware onto the microcontroller. (2 minutes)

Reboot the device and run the test script. (3 minutes)

He waited, staring at the blinking LEDs on the board. The test script finished. The device crashed.

Sighing, he hooked up a debugger and stepped through the code line by line. After 30 minutes of debugging, he found the culprit: an off-by-one error in the threshold check. He fixed it and then had to repeat the whole process of compiling, flashing, rebooting, and testing.

It was well over an hour before Mark could confirm the fix. Every change forced him through this long cycle again, adding to his frustration and eating into time he could have spent on new features. With such a drawn-out feedback loop, getting back to the task at hand felt like a never-ending round of manual testing and debugging.

Story 2: The Two-Week Production Nightmare

Sophia pushed a new feature live: new discount logic for the checkout flow. Two weeks later, a user reported that the discount wasn’t applying to certain cart combinations.

Tier 1 support escalated the issue to Tier 2, who reached out to Sophia. She was now deep into a new sprint, working on unrelated tasks, but had to drop everything to investigate. Reproducing the bug, an edge case no one had foreseen, took around 2 hours. After digging through code she hadn’t touched in weeks, she finally found the cause: a faulty condition that had never been tested against certain cart combinations.

Fixing it took another 2 hours, followed by deployment, testing, and waiting for confirmation, with every step slowed down by the context switch. Meanwhile, the project manager stayed in contact with the customer, managing expectations and apologizing for the delay.

It took another day before the issue was resolved. The customer was frustrated, and the feedback loop had stretched over two weeks; Sophia barely remembered the feature she had written, let alone its edge cases. The whole process was painfully slow and inefficient, and the cost of context switching was becoming unbearable.

End of Stories (unfortunately, we all love them 😆)

I hope you enjoyed them. By now, the performance issues should be easy to spot:

Slow Feedback Loops: Both Sophia and Mark faced long delays in getting feedback on changes, extending development time.

Context Switching: Both developers had to shift between tasks, slowing progress and disrupting focus.

Inefficient Manual Testing: Time-consuming manual testing (deployments for Sophia, firmware flashing for Mark) delayed issue identification and resolution.

Frustration and Wasted Time: Repeated, slow processes led to frustration and wasted hours.

Missed Edge Cases: Bugs due to untested edge cases resulted in late discovery and complicated fixes.

Customer Impact: For Sophia, slow feedback negatively affected customer satisfaction and resolution time.
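Much of Mark's loop disappears if the threshold rule lives in a pure function with no hardware access, so it can be tested on a laptop. The sketch below uses Python for readability (real firmware would more likely be C), and the function name, the 18.0 °C value, and the "at or above" rule are hypothetical assumptions, not details from Mark's code.

```python
# Hypothetical sketch: the power-saving threshold rule isolated from hardware
# access so it can be unit tested on a laptop instead of on the device.
# The name, the 18.0 °C value, and the "at or above" rule are assumptions.

POWER_SAVE_THRESHOLD_C = 18.0


def should_enter_power_save(temperature_c: float,
                            threshold_c: float = POWER_SAVE_THRESHOLD_C) -> bool:
    """Return True when the reading is at or above the threshold."""
    return temperature_c >= threshold_c


def test_threshold_boundaries() -> None:
    # Boundary values are where off-by-one mistakes hide: writing `>` instead
    # of `>=` would make the first assertion fail immediately.
    assert should_enter_power_save(18.0)
    assert should_enter_power_save(18.1)
    assert not should_enter_power_save(17.9)


if __name__ == "__main__":
    test_threshold_boundaries()
    print("threshold tests passed")
```

A check like this runs in well under a second, compared with the roughly 10-minute compile, flash, and reboot cycle in the story.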

With that in mind, is the time spent on automated testing really wasted? Does it really reduce productivity?

NOTE: These stories intentionally feature two entirely different developers, one in cloud computing and the other in embedded systems, to highlight that the inefficiencies caused by slow feedback loops and manual testing are universal across domains and can be addressed with the same techniques.
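For Sophia's checkout, the technique looks like this: each cart combination becomes a fast unit test that runs before release. The story doesn't say what the real condition was, so the rule here (10% off accessories once a cart holds at least two accessory units) and the names `CartItem` and `accessory_discount` are hypothetical, chosen only to illustrate testing cart combinations.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CartItem:
    sku: str
    category: str
    unit_price: Decimal
    quantity: int = 1


def accessory_discount(items: list[CartItem]) -> Decimal:
    """Hypothetical rule: 10% off accessories once the cart has 2+ accessory units."""
    accessories = [item for item in items if item.category == "accessory"]
    # Count units, not line items: two of the same SKU should also qualify.
    units = sum(item.quantity for item in accessories)
    if units < 2:
        return Decimal("0.00")
    subtotal = sum((item.unit_price * item.quantity for item in accessories), Decimal("0"))
    return (subtotal * Decimal("0.10")).quantize(Decimal("0.01"))


def test_discount_cart_combinations() -> None:
    case = CartItem("CASE-1", "accessory", Decimal("20.00"))
    cable = CartItem("CABLE-1", "accessory", Decimal("10.00"))
    phone = CartItem("PHONE-1", "phone", Decimal("500.00"))

    # Two different accessories qualify: 10% of 30.00.
    assert accessory_discount([case, cable]) == Decimal("3.00")
    # Edge case: a single accessory line with quantity 2 must qualify too.
    assert accessory_discount([CartItem("CASE-1", "accessory", Decimal("20.00"), 2)]) == Decimal("4.00")
    # One accessory next to a non-accessory does not qualify.
    assert accessory_discount([case, phone]) == Decimal("0.00")
    # An empty cart gets no discount.
    assert accessory_discount([]) == Decimal("0.00")


if __name__ == "__main__":
    test_discount_cart_combinations()
    print("discount tests passed")
```

A line-item check such as `len(accessories) >= 2` would pass the first assertion but fail the second, which is the kind of cart-combination bug that otherwise surfaces only in production.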

How Can It Be Measured?

I've extensively used automated testing throughout my career, but like many engineers focused on delivering features, I haven't always measured quality or performance metrics in a structured way. Now, I'm actively researching how to quantify the benefits of testing, even though I haven't yet applied these measurements in practice.

For those interested in tangible benefits, I'd like to share some insights from my research.

So let's consider what the desired outcomes should be:

Improving Individual Developer Productivity:

More time spent on implementation

Fewer bugs found during manual end-to-end testing

Less time waiting for deployment pipelines to execute

Fewer bugs found in the deployment testing phase

Less time spent fixing bugs

Less time switching context due to bug-related interruptions

(The following diagram shows how the share of time spent on each activity should ideally shift once test automation is in place.)

Improving Whole Team Productivity:

More time spent on development

Less time spent in code reviews fixing basic issues

Less time spent fixing bugs reported by the QA team

Less time spent on retesting fixes

Less time switching context due to bug-related interruptions

Improving Whole Branch/Organization Productivity:

More time spent on delivery activities

Less time spent on communication between Delivery and Support

Less time fixing bugs found in production

Less time switching context due to bug-related interruptions

As is widely known, Feature One ≠ Feature Two, so directly comparing the time spent delivering one feature with the time spent on another isn't feasible. Comparing ratios between features, however, such as time spent on implementation versus bug fixing, makes much more sense.
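As a minimal sketch of that idea, the snippet below compares two features by the share of their time spent on implementation rather than by absolute hours. The feature names and hour counts are invented for illustration; they are not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureTime:
    name: str
    implementation_hours: float
    bug_fixing_hours: float

    def implementation_share(self) -> float:
        """Share of the feature's total time spent on implementation (0.0 to 1.0)."""
        total = self.implementation_hours + self.bug_fixing_hours
        return self.implementation_hours / total if total else 0.0


# Hypothetical numbers: the features differ in size, so their absolute hours
# are not comparable, but their ratios are.
features = [
    FeatureTime("Feature A", implementation_hours=8, bug_fixing_hours=8),
    FeatureTime("Feature B", implementation_hours=72, bug_fixing_hours=8),
]

for feature in features:
    print(f"{feature.name}: {feature.implementation_share():.0%} implementation")
```

Feature B took five times as long overall, yet its 90% implementation share (versus 50% for Feature A) is the number worth comparing.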

Based on these desired outcomes, we can propose some metrics:

Metrics for Improving Individual Developer Productivity

Percentage of development time spent on implementation

(Time spent coding new features) / (Total coding time)

Higher = better.

Bugs found during manual, end-to-end testing

Number of defects found during local manual testing

Lower = better.

Average time waiting for deployment pipelines

Total time spent waiting for builds and deployments per developer per sprint

Lower = better.

Bugs found in the deployment testing phase

Number of defects found during manual testing after deployment

Lower = better.

Time spent fixing bugs found

Total hours spent on debugging and fixing bugs

Lower = better.

Context switch frequency due to bug-related interruptions

Number of times a developer has to go back to the beginning of the implementation loop to fix a bug

Lower = better.

Metrics for Improving Whole Team Productivity

Percentage of sprint time spent on development

(Time spent on feature development) / (Total sprint time)

Higher = better.

Time spent in code reviews on basic issues

Average time spent per pull request fixing trivial mistakes

Lower = better.

Bugs reported by QA per sprint

Number of bugs QA reports after developer testing

Lower = better.

Time spent on retesting fixes

Total QA hours spent verifying bug fixes per sprint

Lower = better.

Context switch frequency due to bug-related interruptions (team-wide)

Number of times developers or testers must stop current work to handle defects

Lower = better.

Metrics for Improving Whole Branch/Organization Productivity

Percentage of total time spent on delivery activities

(Time spent on planning, developing, and shipping features) / (Total project time)

Higher = better.

Bugs found in production per release

Number of critical defects reported after deployment

Lower = better.

Context switch frequency due to production bugs

Number of times developers are interrupted to address production issues

Lower = better.
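Most of the "Lower = better" metrics above are counts, so a simple defect log is enough to track them. The sketch below assumes a hypothetical record format (sprint number, the phase where the defect was found, and whether fixing it interrupted someone's current work) and tallies it per sprint. The phase names mirror the metrics listed above, and counting each interrupting defect as one context switch is a simplifying assumption.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class FoundIn(Enum):
    LOCAL_MANUAL_TESTING = "local manual testing"
    DEPLOYMENT_TESTING = "deployment testing"
    QA = "QA"
    PRODUCTION = "production"


@dataclass(frozen=True)
class DefectRecord:
    sprint: int
    found_in: FoundIn
    interrupted_current_work: bool  # did fixing it pull someone off their task?


def sprint_defect_metrics(records: list[DefectRecord], sprint: int) -> dict[str, int]:
    """Count defects per discovery phase and bug-related context switches for one sprint."""
    in_sprint = [r for r in records if r.sprint == sprint]
    per_phase = Counter(r.found_in for r in in_sprint)
    # Counter returns 0 for phases with no defects, so every phase is reported.
    metrics = {phase.value: per_phase[phase] for phase in FoundIn}
    # Simplifying assumption: one interrupting defect = one context switch.
    metrics["context switches"] = sum(r.interrupted_current_work for r in in_sprint)
    return metrics


# Hypothetical usage with made-up records.
log = [
    DefectRecord(1, FoundIn.QA, True),
    DefectRecord(1, FoundIn.PRODUCTION, True),
    DefectRecord(1, FoundIn.LOCAL_MANUAL_TESTING, False),
    DefectRecord(2, FoundIn.LOCAL_MANUAL_TESTING, False),
]
print(sprint_defect_metrics(log, sprint=1))
```

Comparing these per-sprint numbers before and after introducing automated tests is one way to see whether defects are being caught earlier, where they are cheaper to fix.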

Some of these may seem obvious, but honestly, how many of us actually measure them?

There are, of course, other desired outcomes of adopting an automated test suite, but I want to stay focused on the point of this article: how it improves productivity instead of slowing you down.

Other Benefits

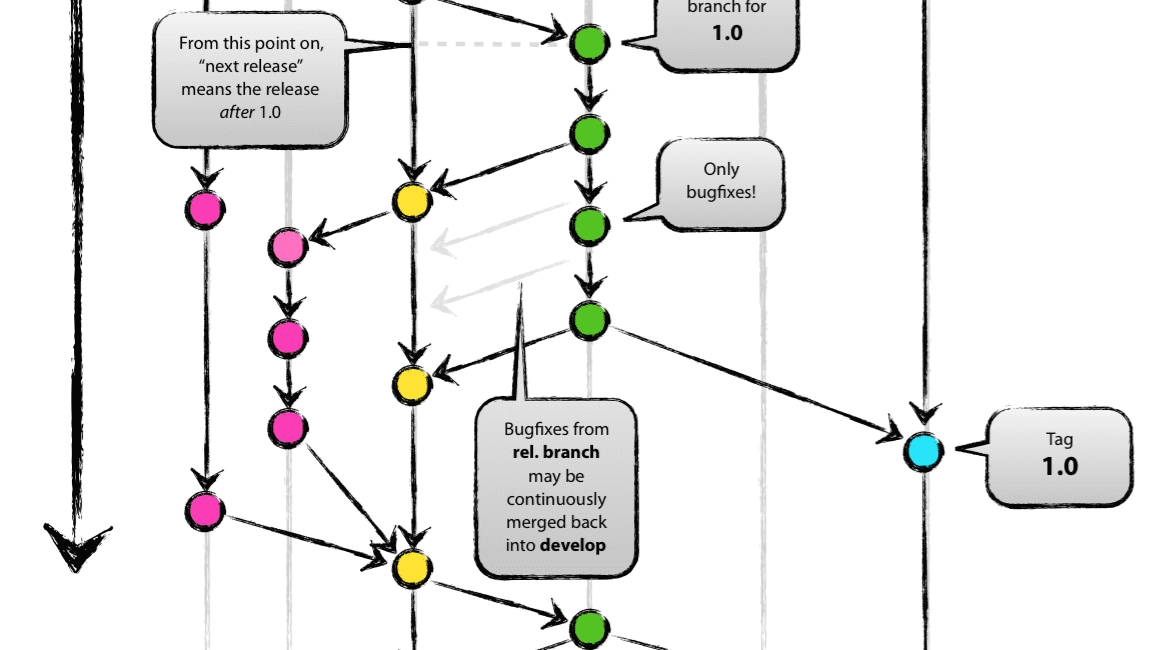

Extreme Programming and Trunk-Based Development are well-established practices that help development teams boost productivity. However, they **rely on test automation** to be truly effective. With a solid testing foundation, your CI/CD pipeline can become genuinely continuous.

Getting Started with Test Automation

Start small: there's no need to pause development to retrofit tests across your entire codebase. Instead, integrate testing into your workflow as you build new features. Adopting Test-Driven Development (TDD) can make this process smoother and help your test coverage grow naturally over time.

Want to dive deeper? Subscribe for upcoming articles where I'll break down best practices for effective test automation.